In today's story, I will be talking about one of the roles of data product manager which he\she plays in making a data platform as a product, for example, a modern data stack. Yes, the data products should not be assumed to be a designed backend system or a frontend interface with fancy eye candy tabs and features. when it comes to data products they mostly have paths filled with tables and schemas and further these components are getting accumulated as downstream components getting consumed for advanced analytics layer, visualization, creating a dashboard for monitoring, and business insights.

On a very high level, here we will talk about how the product data pipeline is getting framed and organized for downstream usage in any digital solution space.

I am coming back to PM talking to PMs, and why so we know that we have a big group of PMs working for a standard software product solution for solving customer problems. But nowadays mostly we see applications are getting blended with analytics and AI\ML highlight and that is obvious as the world is completely aware how bringing data in any solution makes a big difference in terms of creating value for customers, generating business revenue, making the company an embodiment of digitalization.

Sagacious data professionals are coming up with all-new ways of developing data stacks, they are using the best possible tools and data architecture to establish a robust platform. Mostly they will talk about extraction, transformation, loading, consuming for analytics in a core technical way... And at this stage, the product managers sans data\AI\ML core acumen are having a void created to understand the product's way ahead. It's like a sailor can’t be talking sprinter language for his act.

And hence comes the Data Product manager in the picture who will be owning the knowledge sharing on how the solution for data product is getting framed and integrated with the mainstream application for the solution. Let's see what they talk about, it will be mostly around the architecture of the pipeline whether it's ETL pipeline or any advanced analytics pipeline where ML model getting trained and prediction of the target variable is happening.

The data product manager will be a translator for the process involving extracting and loading from the source system to the destination schema, how this will be built, operated, and maintained, and the transformation, modeling, and validation within the destination is orchestrated.

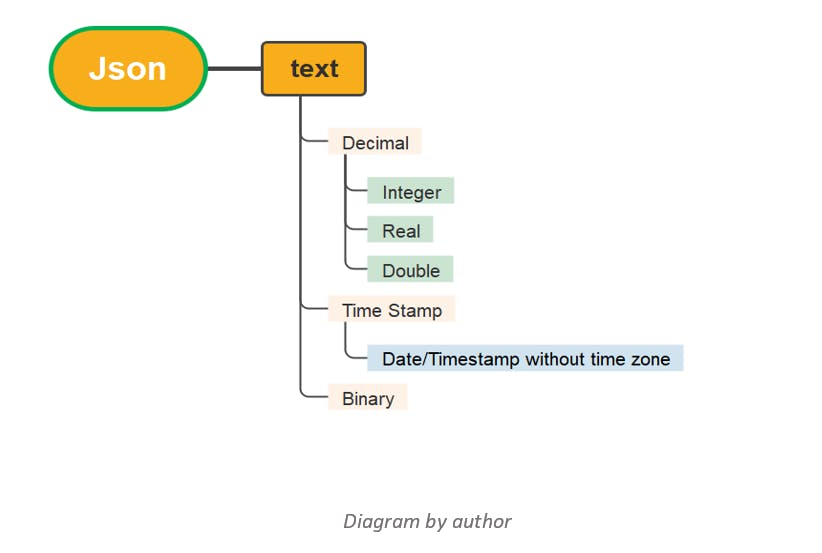

They will emphasize the handling of data types in the files and source data. How is the ETL process getting designed so that it will have the capability of handling all standard data types across the data repository? Their discussion will always be about the flexibility of the solution on automating the data type selection while extracting from source and getting the requisite transformation done on data and writing on destination based on the supported data types. This data process paradigm is something not commonly known to seasoned product managers and then data PM is bridging the gap

They will talk about the ideation of transforming data types that the target destination does not support. Explaining the data editing and cleaning process which are needed to ensure that it is in the best possible format for use within the decoded destination. These definitive modifications are quite broad and helpful to all the customers.

In the data pipeline, the connector plays a very vital role, hence it's been graded as one of the most pivotal features. I will put a few lines very intrinsically for the connector's presence as a feature in the pipeline.

As the name speaks in volume it's meant for connecting to the data source and this can be done using the inhouse created solution, using data lake connection APIs like from Snowflake, Azure blob, AWS redshift, and its a plethora of connectors readily available for making it part. Nowadays companies are creating customized connectors, for example, Fivetran is a modern data stack solutioning group that connects to the data sources using two different types of connectors: push and pull.

- Data is actively retrieved, or pulled, from a source through pull connections. Fivetran establishes a regular connection with a source system and downloads data from it.

- Source systems deliver data to Fivetran as events using push connectors like Webhooks or Snowplow. They buffer the events in the queue when they receive them in the collection service. In the cloud storage buckets, the event data as JSON is saved.

The data product manager will cover the fundamentals of :

Ingestion and Preparation of Data.

loading and writing process of data

Transforming and mapping of data to the destination

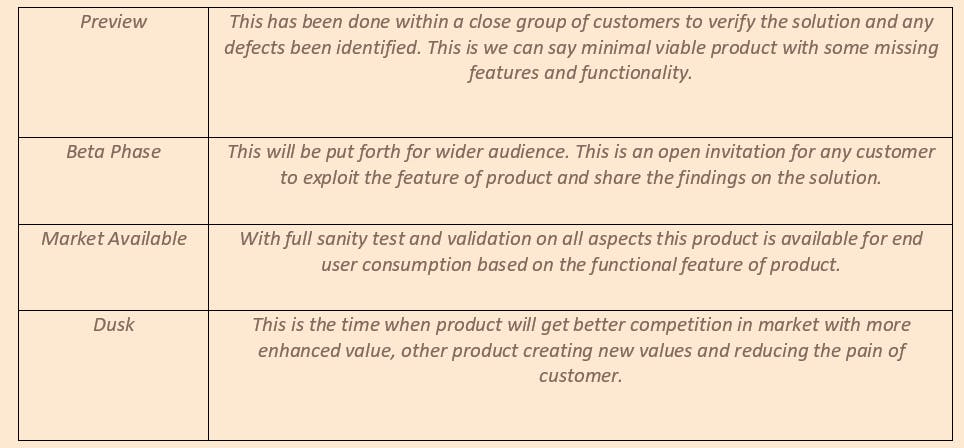

And finally comes the release stages of the data pipeline features or tools created to streamline and enhance the data pipeline functionality and operations for end-user consumption.

Personas for the Data product mostly are Data engineers, Data scientists, Research scientists, Business analysts, Machine learning engineers, MLops engineers, and many end-user customers who know how to utilize processed data.

So with this, I am concluding the story and what I have put here is a very small chunk of the data product manager's work. There are so many aspects still there to be unveiled, hence will keep on bringing points and use cases to share the experience of a DPM.

SO IT'S DPM TALKS TO PM